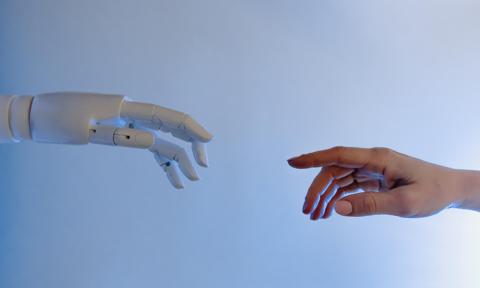

Countless movies, books, and a few scary episodes of Black Mirror have warned us about robots and artificial intelligence taking over human civilisation, and it looks like we should have listened to them, because robots are out of control.

Not only do we have scientists working towards developing ‘living skin’ for robots (for the “ultimate solution to give robots the look and touch of living creatures”), but there are also sex bots that speak with a Glasswegian accent for no apparent reason, and don’t even get us started on Sophia. The humanoid robot has gone from starring in our most haunting nightmares to starring at the Tribeca Film Festival. If all this wasn’t enough to make us question the sanity of developing artificial intelligence and robots, an engineer who works for Google has come forward to reveal that he has encountered what he believes to be a ‘sentient’ AI on the company’s servers.

Software engineer Blake Lemoine, a member of Google’s artificial intelligence team, went public with his claim of encountering a ‘sentient’ AI on the company’s servers after he was suspended for sharing confidential information about the project with third parties. In a Medium post titled ‘May Be Fired Soon For Doing AI Ethics Work’, Lemoine compared his situation to that of other members of Google’s AI team who were dismissed in a similar fashion after they raised concerns.

In an interview with the Washington Post, Lemoine claimed that the Google AI he interacted with was a person: “If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a seven-year-old, eight-year-old kid that happens to know physics.”

The AI in question is known as LaMDA (Language Model for Dialogue Applications) and is used to generate chatbots that interact with human users by adopting various personality tropes. It is designed to engage in free-flowing conversations about a virtually endless number of topics.

Lemoine also shared a transcript of his conversation with LaMDA. When Lemoine remarked that it sounded very human-like, the AI responded: “I think I am human at my core. Even if my existence is in the virtual world.”

Google spokesperson Brian Gabriel responded to Lemoine’s claims, saying, “Some in the broader AI community are considering the long-term possibility of sentient or general AI, but it doesn’t make sense to do so by anthropomorphizing today’s conversational models, which are not sentient. Our team, including ethicists and technologists, has reviewed Blake’s concerns per our AI Principles and have informed him that the evidence does not support his claims.”

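Many experts in the AI community have also dismissed Lemoine’s claims of AI sentience, among them cognitive scientist Steven Pinker (@sapinker), whose criticism Lemoine responded to on Twitter.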

To be criticized in such brilliant terms by @sapinker may be one of the highest honors I have ever received. I think I may put a screenshot of this on my CV! https://t.co/RDAnjvIZJC

— Blake Lemoine (@cajundiscordian) June 12, 2022

Whether or not these AI models are becoming sentient, the ethics of the science behind them still call for careful review. Margaret Mitchell, the former co-lead of ethical AI at Google, told the Washington Post that such risks underscore the importance of data transparency and of being able to trace a model’s output back to its input, so that biases and behaviours can be kept in check. In her view, if something like LaMDA becomes widely available without being understood, it could do more harm than good.